Thursday, 2025-03-27

Building a CoAP application on Ariel OS

Ariel OS is an embedded operating system written in Rust, whose first version 0.1. was released recently. It is designed for "constrained devices" (a.k.a. "embedded devices" or "IoT devices"), that is, electronic devices with little power and storage – think "what is in your remote control" and not "what is on your computer". The Constrained Application Protocol (CoAP) is a networking protocol for the same group of devices. It is similar to HTTP in its semantics, but tuned to simple implementation and small messages that work over the most low-power networks.

Ariel OS logoThis tutorial guides you through first steps with both. It is recommended to be familiar with at least any one of software development on embedded systems, software development in Rust, or CoAP. The goal of the tutorial is to convey an understanding for what Ariel OS and CoAP can be used for, and to set up a playground for further exploration of either or both. It assumes that you are using a shell on a recent version of Debian GNU/Linux or Ubuntu, but should work just as well on many other platforms.

The tutorial is grouped into 4 parts:

- Getting started, where we set up hardware, software, and run Ariel OS for the first time;

- Building the application, where we look at how to start and how to use a CoAP server;

- Access control, where we put the S for Security into IoT; and

- Custom CoAP resources, where we explore how CoAP resources can be defined.

Shortcut

If you prefer to just get going without the explanations, the CoAP server example shipped with Ariel is similar to the late stages of this tutorial.

You will find more notes like this throughout the document. They offer additional exercises, further references and context or just debugging tips. Feel free to skip them unless you want to (or, because there is trouble, have to) spend more time on that particular section. They are not necessary to follow the flow of the tutorial. Treat them as side notes.

Getting started

Selecting the hardware

Ariel OS applications run on embedded systems; to get started, this works best with a development kit. Those usually come with an integrated programmer or bootloader.

To run the code of this tutorial, you will need an embedded development board supported by Ariel OS. The Ariel OS manual has an official list of candidates.

For this particular tutorial, the board needs to support

- some networking capability (current choices are Wi-Fi and Ethernet over USB),

- a hardware random number generator, and

- Ariel's storage module.

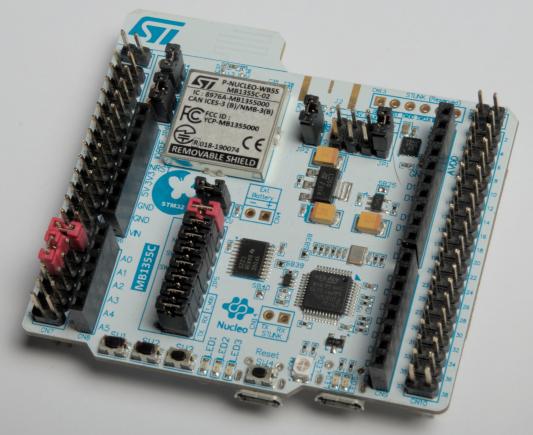

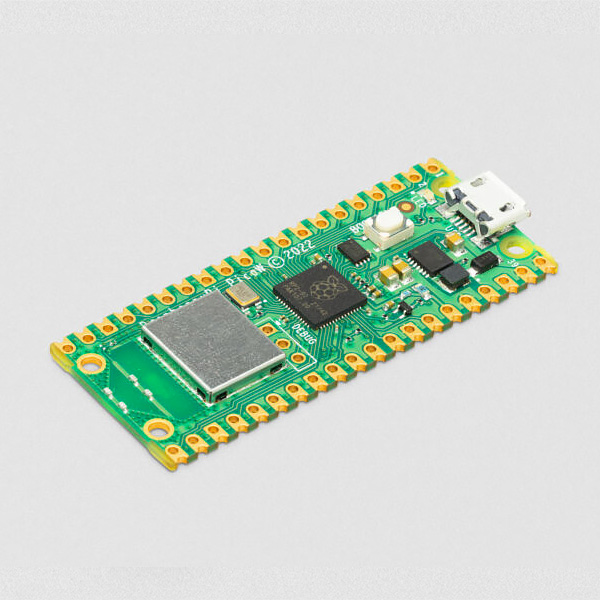

At the time of writing, candidate boards are the nRF52840 DK (nrf52840dk). the Raspberry Pi Pico (rpi-pico), Pico W (rpi-pico-w) or Pico 2, and the ST NUCLEO-H755ZI-Q/-WB55RG (st-nucleo-h755zi-q/st-nucleo-wb55).

The rest of the text will assume that you picked st-nucleo-wb55; if you pick any other, just replace that text with the name of your board (eg. nrf52840dk) wherever it occurs in the rest of the tutorial.

Our own application

Let's build our own application, and call it my-coap-server. The quickest way to do this is through cargo generate:

$ cargo generate --git https://github.com/ariel-os/ariel-os-template --name my-coap-server

"But I don't want no script-generated auto-scaffolding that invites cargo culting!"

No problem. All files needed will be mentioned and quoted in full as part of the tutorial (at latest in the appendix), you can create them all yourself.

You can also start from cloning the ariel-os-hello git template, which at the time of writing is virtually identical to the output of cargo generate.

Let's try that out by running it:

$ cd my-coap-server $ laze build -b st-nucleo-wb55 run ⟦...⟧ Compiling ariel-os-utils v0.1.0 ([...]) ⟦...⟧ Programming ✔ 100% [####################] 16.00 KiB @ 47.98 KiB/s (took 0s) Finished in 0.89s [INFO ] Hello from main()! Running on a st-nucleo-wb55 board.

Doesn't work? Let's help us fix that.

Drop by on our chat, or open an issue in our issue tracker, and we'll have a look at it together.

Once you see the "Hello from main()" line, we have shown that

- building Rust programs works,

- the tools are in place to flash the program onto your embedded device, and

- the program runs successfully to print "Hello".

Great: You have just run a program on Ariel OS!

For the nitpickers

At least in the st-nulceo-wb55 default configuration, our program does not really print (or even store) any "Hello" message, because our debug console mostly logs through defmt. With that, our program just decides when to print a particular text and sends a short number representing it; the host system then looks up that number in the compiled program.

Building the application

Let's customize our application. We start from the template src/main.rs that initially looks like this:

#![no_main] #![no_std] #![feature(impl_trait_in_assoc_type)] #![feature(type_alias_impl_trait)] #![feature(used_with_arg)] use ariel_os::debug::{exit, log::info, ExitCode}; #[ariel_os::task(autostart)] async fn main() { info!( "Hello from main()! Running on a {} board.", ariel_os::buildinfo::BOARD ); exit(ExitCode::SUCCESS); }

The first block of lines (#![…) tells Rust that we're not exactly building an everyday PC program. The Rust documentation has links for them all. Don't get too lost there: The Ariel OS maintainers aim to reduce that kind of boilerplate to a minimum over time. The use line is Rust's mechanism to import short names from namespaces, and #[ariel_os::task(autostart)] tells the OS to run this function when the board is booted up.

We want to run a service on the network, so "print some text and terminate execution" is what we'll have to replace.

Side quest

If you are new to embedded or Rust development, you may want to pause here and play around. Many introductory exercises (such as the first steps of the Rust book and many popular exercises). Note that we have explicitly imported an info! macro. You can use that in a way very similar way to the println! that is often seen in tutorials, or simply use Ariel's own println! through an extra import of use ariel_os::debug::println;.

First custom code

Instead of the info and the exit, let's launch a CoAP server, and thus start exploring the network.

A CoAP server, like any web server, needs to be told what to respond to clients, and at which path. We can build a very boring server out of a few lines that replace the content of the main function:

use coap_handler_implementations::{new_dispatcher, HandlerBuilder, SimpleRendered}; let handler = new_dispatcher() .at(&[], SimpleRendered("Hello from my CoAP server")) .at(&["private", "code"], SimpleRendered("The password is 'mellon'.")); ariel_os::coap::coap_run(handler).await;

The coap_run() function takes any implementation of the CoAP interfaces we use, and runs that as a network server indefinitely. The implementations we pick are assembled from a CoAP handler implementations crate that aims to make frequent simple handlers easy to implement.

Dependency handling

Building this would fail: We start using features and crates we did not enable before.

Making the coap_handler_implementations parts work is classical Rust dependency handling – just add this line to Cargo.toml (and with three more so we don't have to come back here later):

[package] name = "my_coap_server" version = "0.1.0" edition = "2021" [dependencies] ariel-os = { path = "build/imports/ariel-os/src/ariel-os", features = [] } ariel-os-boards = { path = "build/imports/ariel-os/src/ariel-os-boards" } +coap-handler-implementations = "0.5.2" + +minicbor = { version = "0.24", features = ["derive"] } +coap-handler = "0.2.0" +coap-numbers = "0.2.6"

Enabling the ariel_os::coap module follows a different workflow than just enabling features. It uses features of the laze build system to not just enable statically additive features, but to make decisions based on the available modules on where to go. In this concrete case, adding CoAP support pulls in UDP and IP support, and thus network interfaces – and which network interface implementation is used depends on the board. For the rpi-pico-w, this would be Wi-Fi support, but for the st-nucleo-wb55, this is USB Ethernet in the current Ariel release. Cargo currently has no means of handling such situations on its own. We tell laze to pull the right strings in Ariel's setup by adding these lines to laze-project.yml:

imports: - git: url: https://github.com/ariel-os/ariel-os commit: 3e97d77ecf775824d98c0361bbba2e08aac24298 dldir: ariel-os apps: - name: my_coap_server + selects: + - coap-server + - ?coap-server-config-unprotected # We'll come back to this!

You can see how, for your specific board, the output of

$ laze build -b st-nucleo-wb55 info-modules

grows from before to after you added those lines.

Unprotected access? Really? Is it the '90s again?

Please bear with me on two aspects:

- We'll get to it, right one section down.

- There is a plan for secure setups without any intervention at all that needs some enhanced tool support.

A first CoAP request

Time to run a first CoAP server:

$ laze build -b st-nucleo-wb55 run ⟦...⟧ Compiling ariel-os-utils v0.1.0 ([...]) ⟦...⟧ Programming ✔ 100% [####################] 117.00 KiB @ 25.43 KiB/s (took 5s) Finished in 6.16s [INFO ] Hello from main()! Running on a st-nucleo-wb55 board. ⟦...⟧ ⟦... possibly a few seconds pass ...⟧ ⟦...⟧ [INFO ] IPv4: UP [INFO ] IP address: 10.42.0.175/24 [INFO ] Default gateway: Some(10.42.0.1) [INFO ] IPv6: DOWN [INFO ] Starting up CoAP server [INFO ] Server is ready.

All looks ready – the precise network configuration you see will depend on your board and network.

Not getting there?

On boards that default to IP over USB, check whether both USB connectors are attached to your computer.

Then, check your network manager: If a new USB Ethernet device has just popped up, edit its IPv4 and IPv6 settings to "Shared to other computers".

On Wi-Fi devices, you'll need to set the Wi-Fi credentials in an environment variable.

IPv4? Really? Is it the '90s again?

We are working on it. (Basic support is there, but enabling IPv6 without at least support for SLAAC, which is what we are working on, is a bit pointless).

Once you see an address, open another terminal. We'll use the tool aiocoap-client, which is invoked similarly to the tool curl you may know from HTTP. Run this command, replacing the IP address with the one from your console output from just before:

$ aiocoap-client coap://10.42.0.175/ Hello from my CoAP server

You just accessed your constrained device over the network.

Just like in HTTP, we address any resources on the device by URLs; they just use the scheme coap rather than https. Also like in HTTP, the method we usually invoke is called GET, which is the default in the client tools, and requests a "representation" of that resource. That representation, the "Hello" text, is what is being printed. As the server indicates a plain text response through the respone's media type "text/plain", the client shows the text as-is.

Exceeding what is provided by default in HTTP, CoAP also has built-in site map, which we can access like this:

$ aiocoap-client coap://10.42.0.175/.well-known/core # application/link-format content was re-formatted <>, </private/code>

Individual resources are distinguished by their path on the server; the server is free to choose paths (outside of the /.well-known/ prefix reserverd by the CoAP specification to features such as the site map). Here we see one more resource we did not use before:

$ aiocoap-client coap://10.42.0.175/private/code The password is 'mellon'.

The array ["private", "code"] of the source code is shown as the path /private/code here. The representation in the source code is closer to how paths are actually expressed in CoAP: CoAP avoids text proceesing, and instead puts the path in separate options ("options" here are CoAP's parameter fields, similar to HTTP's headers). This is most easily seen in the client's verbose output, which shows a human-readable representation of the messages that is closer to the actual binary data than just the URI:

$ aiocoap-client coap://10.42.0.175/.well-known/core INFO:coap.aiocoap-client:Sending request: INFO:coap.aiocoap-client:GET to coap://10.42.0.175 INFO:coap.aiocoap-client:- Uri-Path (11): '.well-known' INFO:coap.aiocoap-client:- Uri-Path (11): 'core' INFO:coap.aiocoap-client:No payload INFO:coap.aiocoap-client:Received response: INFO:coap.aiocoap-client:2.05 Content from coap://10.42.0.175 INFO:coap.aiocoap-client:- Etag (4): b'\xf1\xd2\x97\x13<\xc93)' INFO:coap.aiocoap-client:- Content-Format (12): <ContentFormat 40, media_type='application/link-format'> INFO:coap.aiocoap-client:Payload: 3c3e3c2f612f623e (8 bytes) # application/link-format content was re-formatted <>, </private/code>

Access control, or putting the S for Security into IoT

We have two severe issues in this setup:

- We can't know whether our client is really talking to our board, and

- there is a "secret" sent around without any protection.

Not surprising, though: We left this open in the configuration when we said "unprotected". Let's fix that in laze-project.yml:

selects: - coap-server - - ?coap-server-config-unprotected # We'll come back to this! + - ?coap-server-config-storage

Limiting access

Create a new file called peers.yml next to that laze file:

- from: unauthenticated scope: /.well-known/core: GET /: GET

As the coap-server-config-storage policy is now used, this file is consulted to decide how to populate the device's storage initially. This ultimately guides its decision process on what to do on incoming requests. Access is denied unless explicitly allowed for a given peer; in this case, we allow any peer (from: unauthenticated) read-only access to the sitemap and the / (root) resource.

Run laze again as before to flash the new firmware. Once the firmware is ready, try aiocoap again:

$ aiocoap-client coap://10.42.0.175/private/code 4.01 Unauthorized

This fixed issue 2, but opens a new issue 3: Not even we can access our own resource! Let's leave this aside for a moment and look at issue 1.

For curious readers

The Wireshark network sniffer can decode CoAP requests. If you run the previous examples again while monitoring your network traffic, you will see all details of your CoAP requests visualized. Be sure to select the right network interface. If you are using Wi-Fi on the board, you may want to use Wireshark's view filter and put coap in there to see only relevant packages.

Authentication

Ariel OS's CoAP stack supports several security mechanisms that eventually set up OSCORE; the manual enumerates them, and gives links and context. For this tutorial, we focus on using EDHOC, which efficiently establishes security for constrained devices from public key cryptography. EDHOC has all the desirable properties of modern Internet communication: It encrypts messages, protects them from manipulation, and gives client and server the tools to be sure of whom they are communicating with. None of this needs to trust the network: As far as secure communication is concerned, requests could be happening across the Internet. Anyone looking at the messages can just learn that a server and a client communicate from their given addresses (metadata), request sizes and timing, but not what the content of their communication is; anyone attempting to manipulate the exchange can at worst keep the peers from communicating, but can not alter their messages' content.

For following the tutorial, it will suffice to understand that both participants in our communication (i.e., the device and your PC) will eventually each have a secret key (which never leaves the device and is never shown on screen) and a public key, which can freely be shown to anyone.

Back to our device:

Since we enabled the coap-server-config-storage module, a new line is shown at startup:

[INFO ] CoAP server identity: [a1, 08, a1, 01, a4, 01, 02, 02, 40, 20, 01, 21, 58, 20, ca, 0c, f3, eb, cc, 42, 3d, 5c, 5b, 53, b9, 2b, 20, 2e, fe, 25, ba, 8e, 58, 3d, 4d, 40, 8e, 64, 7c, f1, 39, 59, 06, 59, d0, 88]

This is a public key of our device (precisely, a CCS (CWT Claims Set), which contains a COSE key). It was generated at first startup inside the device, and persisted across reboots, at least until there are major changes to the firmware.

We can use this public key to tell our client application whom we expect to talk to.

We are working in Ariel OS to get that line copy-pasteable more easily; until then, you can convert it to a usable format. Run this command, copy-paste the inner parts of the CoAP server identity in your device's output, and press Ctrl-D (twice if needed) to get the array output:

$ cbor-edn cbor2diag -i hex a1, 08, a1, 01, a4, 01, 02, 02, 40, 20, 01, 21, 58, 20, ca, 0c, f3, eb, cc, 42, 3d, 5c, 5b, 53, b9, 2b, 20, 2e, fe, 25, ba, 8e, 58, 3d, 4d, 40, 8e, 64, 7c, f1, 39, 59, 06, 59, d0, 88 ⟦ press Ctrl-D here ⟧ { 8:{ 1:{ 1:2, 2:h'', -1:1, -2:h'ca0cf3ebcc423d5c5b53b92b202efe25ba8e583d4d408e647cf139590659d088' } } }

Create a new file client.diag file like below, customizing

- the top map key: your device's URL, plus the wildcard indicating it applies to all resources on there, and

- peer_cred: the COSE data from above, wrapped in {14: …} as shown:

{

"coap://10.42.0.175/*": {

"edhoc-oscore": {

"suite": 2,

"method": 3,

"own_cred": {"unauthenticated": true}, / Once more, we will come back to this /

"peer_cred": {14: {8: {1: {1: 2, 2: h'', -1: 1,

-2: h'ca0cf3ebcc423d5c5b53b92b202efe25ba8e583d4d408e647cf139590659d088'}}}},

}

},

}

That doesn't look like valid JSON.

Right: It isn't. Constrained devices don't do string processing well, and text labels can become a huge overhead on networks where every byte counts. The data is encoded in a human readable form of CBOR, which is to JSON like CoAP is to HTTP. If you are familiar with JOSE, most prominently known from JWTs, you will find the same concepts but compact and binary in COSE (that is what you copy-pasted above), and there are even CWTs, which are supported by Ariel OS but not used in this tutorial.

Now that we can describe whom we expect to talk to, let's tell that to our client in the next request:

$ aiocoap-client coap://10.42.0.175/ --credentials client.diag Hello from my CoAP server

Potential footgun

Take care with the {14:…} around the {8:…}: That needs to stay there; if your request is not successful, check again whether your peer_cred still is of the same shape as in the listing above.

We have thus addressed issue 1, and can be sure we talk to the right device. This is already similar to what you get on your typical browser's HTTPS connection: the client knows who the server is, and the server talks to any client. The difference is that unlike for domain names, where a certificate hierarchy is installed in the browser and any of many organizations has performed some checks to ensure the server operator "owns" the domain, there is no such hierarchy in IP addresses. Instead, we have manually accepted the server's public key, like we might accept a self-signed certificate in a web browser.

You don't own it until you break it.

Try changing any bit in the peer_cred structure, or switch to a different embedded device (just be sure to update the address or the configuration will not be applied), and the request will fail. You may observe different modes of failure: For example, arbitrary modifications to the long hex string (the public key) even fail before a request is sent (because it is not even a valid public key). To get to a failure mode where things work up to the point where the cryptographic checks reject them, you can use the tutorial's hex public key – there is no chance that that is your own device's key.

Things may also fail with the same device if you change the firmware too much; with the current version of Ariel, storage may or may not persist through a firmware update.

Mutual authentication, and authorization

The last item to tackle is accessing restricted resources, allowing only you read the /private/code resource.

To define what "you" means, you will need to create a secret key on the PC – previously, the client created a random one for every request.

Run this to create a new key, which gets stored in myself.cosekey:

$ aiocoap-keygen generate myself.cosekey --kid 01 {14:{8:{1:{1:2,2:h'01',-1:1,-2:h'bcd82eeaa8956d4eb4179eae5c0c425055f848d3b9116c50fa9bacc0f882bd31', -3:h'7e862424798d289cf130ff69a47918c5120bd1d8721bd1030c050ee4521d874e'}}}}

This writes a secret key to a file readable only by you, and outputs a public key in the COSE format you are now already familiar with. A change to client.diag tells aiocoap to use this key to talk to this device; as always, put in the values you had in your previous output instead of the example data:

"method": 3, - "own_cred": {"unauthenticated": true}, + "own_cred_style": "by-key-id", / This is why we put --kid in; had we not, we'd put "by-value" and send more data. / + "own_cred": {14:{8:{1:{1:2,2:h'01',-1:1,-2:h'bcd82eeaa8956d4eb4179eae5c0c425055f848d3b9116c50fa9bacc0f882bd31', + -3:h'7e862424798d289cf130ff69a47918c5120bd1d8721bd1030c050ee4521d874e'}}}}, + "private_key_file": "myself.cosekey", "peer_cred": {14: {8: {1: {1: 2, 2: h'', -1: 1, -2: h'ca0cf3ebcc423d5c5b53b92b202efe25ba8e583d4d408e647cf139590659d088'}}}},

We also need to tell our firmware by adding these lines to peers.yml:

- kccs: | {8:{1:{1:2,2:h'01',-1:1,-2:h'ec1d9db0b8eb9672802808b7729712b97f9d0acf15cf30eeab60c63ddc38f660', -3:h'bc61c6a75a242eb7724edc0664c20d530e6f5ba43e8a8e02c0836dacb9199a74'}}} scope: allow-all

Remember the footgun above?

It's right there again, in the other direction: the peers.yml does not take the outer {14:…} layer. Work is ongoing to harmonize this between the tools involved. For background, aiocoap treats credentials to be a COSE header map (where key 14 indicates a kCCS), whereas Ariel OS's coapcore and the underlying Lakers libraries work more in terms of CCSs as stand-alone entities.

Instead of allow-all, you could just as well enumerate paths explicitly – either way, you (and only you) now have access to all resources.

Flash the firmware, and try it out:

$ aiocoap-client coap://10.42.0.175/private/code --credentials client.diag The password is 'mellon'.

Custom CoAP resources

So far, we have registered two resources in our CoAP handler, both using the SimpleRendered implementation. You could write arbitrary CoAP handlers by implementing the coap_handler::Handler trait manually – but while that offers full control, it is also a tedious exercise.

Instead, let's look at a few other easy handlers to add.

Text on the fly

While plain text is definitely not embedded devices' forte, it is a simple first example.

Let's think of our system as some kind of toy motor control. Actually controlling a motor would excede the scope of this tutorial; this is going to be a pretend motor.

Put this code anywhere in the src/main.rs file outside the main function, eg. above it:

use core::cell::Cell; #[derive(Debug, Copy, Clone)] enum MotorState { Stop, Forward, Reverse, } struct ShowStatus<'a> { motor: &'a Cell<MotorState>, endstop: &'a Cell<u32>, } impl coap_handler_implementations::SimpleRenderable for ShowStatus<'_> { fn render<W: core::fmt::Write>(&mut self, w: &mut W) { writeln!(w, "Current motor status: {:?}", self.motor.get()).unwrap(); for _ in 0..self.endstop.get() { writeln!(w, "The endstop has been triggered.").unwrap(); } } }

First, we define which states a motor can be in: forward, reverse or stopped.

Then, we describe a new type of handler, ShowStatus, that will eventually show the state of a system composed of a motor state and a the number of times an endstop has been triggered. Rust has a strict model of ownership: By default, one component can be in control of any item at any time. The Cell that both the MotorState and the 32-bit integer are wrapped in describes that this is an item that is shared by multiple parties (but small enough that they can just always copy it around, which is why we derive Copy and Clone on the motor state). The 'a are lifetimes to the shared reference the status holds; the Rust book has a chapter on them, but for here it suffices to say that they ensure that the handler is not around for longer than the motor state.

Finally, we describe how such a resource acts as a CoAP resource. Rather than implementing the relatively low-level Handler, we implement an interface (a Trait) that is more high-level, and easy to use for text based applications: SimpleRenderable. This requires us to describe how the handler is rendered into a writer (the compiler promises us that this will work for any W), which we do by using the write! macro, which is similar to println! or info! from earlier. (This is where the Debug derive is needed for: it gives the motor state a convenient text representation that can be reported).

Note that rather than printing a number for endstop events, we print a new line for every single time it happened. This is not a good design, but convenient for the end of the tutorial. By then, we will also see why writing can never fail, and the dreaded .unwrap() is acceptable here.

We've seen one implementation thereof before: Strings (&str) are also SimpleRenderable, and we used them wrapped in SimpleRendered to turn them into full handlers. We can use the motor controller the same way to hook it into our resource list:

+ let motor = Cell::new(MotorState::Stop); + let endstop = Cell::new(0); let handler = new_dispatcher() + .at(&["status"], SimpleRendered(ShowStatus { motor: &motor, endstop: &endstop })) .at(&[], SimpleRendered("Hello from my CoAP server"))

We don't need to update the security policy at this time because your PC's key is already allowed to access all resources. Run laze again and look at the system's state:

$ aiocoap-client coap://10.42.0.175/status --credentials client.diag Current motor status: Stop

Actions and encodings

So far, this motor stays off. Let's add some resource for machine-to-machine motor control, where a concise binary representation of the motor's state can be read or written:

use coap_handler_implementations::{TypeHandler, TypeRenderable}; struct MotorControl<'a> { motor: &'a Cell<MotorState>, } impl<'a> TypeRenderable for MotorControl<'a> { type Get = MotorState; type Post = (); type Put = MotorState; fn get(&mut self) -> Result<MotorState, u8> { Ok(self.motor.get()) } fn put(&mut self, representation: &MotorState) -> u8 { match *representation { MotorState::Stop => info!("Stopping the motor"), MotorState::Forward | MotorState::Reverse => info!("Starting the motor"), } self.motor.set(*representation); coap_numbers::code::CHANGED } }

Unlike with the SimpleRenderable inteface we used before, in TypeRenderable we do not spell out how to render something to a GET request, but we specify what to render. The "how" is then addressed by a yet to be chosen serializer. We do have to state through which types that conversion goes explicitly; the type … = …; lines do this even for the POST method, which we do not use here. (A planned Rust feature will eventually remove the need to do this).

For this example we pick the minicbor serializer, which converts our motor state to and from a CBOR item. CBOR is short for Concise Binary Object Representation, and brings the ad-hoc usability of JSON and its data model to embedded systems.

In order for minicbor to understand how to serialize our motor state, we have to extend its definition:

#[derive(Debug, Copy, Clone)] +#[derive(minicbor::Encode, minicbor::Decode)] +#[cbor(index_only)] enum MotorState { + #[n(0)] Stop, + #[n(1)] Forward, + #[n(2)] Reverse, }

This means that our state will be expressed as a simple 1-byte integer. The minicbor documentation has the details on what the added lines mean precisely, and how it is applied to any more complex objects you may encounter when extending this.

In parallel to a GET method, we also added a PUT method, which finally gives us control of the motor. The PUT method is commonly used in HTTP and CoAP to update a resource to some new desired state: the client just sends the data it wants there to be in the resource.

The PUT implementation produces some log messages that will be shown on the console; this stands in for any real motor control that might happen instead. After changing the value, we explicitly tell which CoAP response code to send. The need to do this comes from the implementation choices of the TypeRenderable interface: In CoAP itself, every response has its status code – it's just that TypeRenderable knows which one to send for a successful GET, but not for PUT.

Wiring it up, we need to specify two more pieces of information: which serializable interface we implented (minicbor version 0.24, this can not be inferred by the compiler) and that there is any additional metadata we want to advertise (unlike SimpleRendered, this type does not provide any defaults for discovery; the resource type here will be shown in the site map and would thus be useful in automation).

.at(&["status"], SimpleRendered(ShowStatus { motor: &motor, endstop: &endstop })) + .at_with_attributes( + &["m"], + &[coap_handler::Attribute::ResourceType("tag:example.com,2025,motor")], + TypeHandler::new_minicbor_0_24(MotorControl { motor: &motor }) + ) .at(&[], SimpleRendered("Hello from my CoAP server"))

Let's try it out – obtain the old value, and set a new one:

$ aiocoap-client coap://10.42.0.175/m --credentials client.diag # CBOR message shown in Diagnostic Notation 0 $ aiocoap-client coap://10.42.0.175/status --credentials client.diag Current motor status: Stop $ aiocoap-client coap://10.42.0.175/m --credentials client.diag -m PUT --payload 2 --content-format application/cbor $ aiocoap-client coap://10.42.0.175/m --credentials client.diag # CBOR message shown in Diagnostic Notation 2 $ aiocoap-client coap://10.42.0.175/status --credentials client.diag Current motor status: Reverse

Got EDHOCError::MacVerificationFailed?

If this error comes up, the firmware size changed too much, and the previous device identity got overwritten. You can update your client.cred with the new identity by repeating the step from the Authentication chapter.

For sending the request to update the parameter, the client needed three new parameters:

- That we want to PUT data. (This is not the only option do send data, we'll see POST later).

- Which payload to send: As per the description of motor state, 2 means "reverse".

- How to send the data: While on incoming data the client can recognize the media type sent along with the data, and could turn the binary data into human readable numbers (this is what the # CBOR message shown in Diagnostic Notation tells), we need to be explicit here to allow the tool to convert from a human-readable version into the right binary representation.

Looking over to the device's debug output, you will now also see lines such as:

[INFO ] Starting the motor

As a last resource, let's add an endstop – a trigger that might prevent a roller blind from going too far:

struct EndstopControl<'a> { motor: &'a Cell<MotorState>, endstop: &'a Cell<u32>, } impl<'a> TypeRenderable for EndstopControl<'a> { type Get = (); type Post = coap_handler_implementations::Empty; type Put = (); fn post(&mut self, _representation: &Self::Post) -> u8 { self.motor.set(MotorState::Stop); self.endstop.set(self.endstop.get() + 1); info!("Endstop hit!"); coap_numbers::code::CHANGED } }

+ .at_with_attributes( + &["e"], + &[coap_handler::Attribute::ResourceType("tag:example.com,2025,endstop")], + TypeHandler::new_minicbor_0_24(EndstopControl { endstop: &endstop, motor: &motor }) + )

Here, we expose only a POST method, which (both in HTTP and CoAP) may be used with or without a payload (here we use it without any). It has no such clear universal semantics as PUT, but generally means "do something with this now". For the resource type we define here, its meaning shall be "Stop the motor, the endstop contact was hit".

In a networked environment, this is something for which we could use more fine-grained control: If the endstop contact is connected through a network, we can let that component trigger a motor stop, but it must not start the motor on its own.

To simulate working on a new system, create a new credentials file endstop-client.diag along with an own key for that (you can look back to the Mutual authentication, and authorization chapter for details).

A peers.yml file can now look like this:

- from: unauthenticated scope: /.well-known/core: GET /: GET /summary: GET # Your own key - kccs: | {8:{1:{1:2,2:h'01',-1:1,-2:h'ec1d9db0b8eb9672802808b7729712b97f9d0acf15cf30eeab60c63ddc38f660', -3:h'bc61c6a75a242eb7724edc0664c20d530e6f5ba43e8a8e02c0836dacb9199a74'}}} scope: /.well-known/core: GET /summary: GET /m: [GET, PUT] # The key for the endstop client - kccs: | {8:{1:{1:2,2:h'01',-1:1,-2:h'b59cd78510257f61529e0f914fdb2390d258c8c212cf3a74e71e8b61b62324bb', -3:h'2ac3e574898dc16b89692678af9e3950d5e46bf786b8dd8dd081091befdfe785'}}} scope: /.well-known/core: GET /e: POST

An endstop client is now limited to discovering and performing the one task it is desigend to be:

$ aiocoap-client coap://10.42.0.175/.well-known/core --credentials client.diag # application/link-format content was re-formatted </status>, </m>; rt="tag:example.com,2025,motor", </e>; rt="tag:example.com,2025,endstop", <>, </private/code> $ aiocoap-client coap://10.42.0.175/e --credentials endstop-client.diag -m POST $ aiocoap-client coap://10.42.0.175/e --credentials endstop-client.diag -m POST $ aiocoap-client coap://10.42.0.175/m --credentials endstop-client.diag 4.01 Unauthorized

One more neat CoAP feature

Remember the /status resource that kept printing an entry for every endstop contact? CoAP is built on UDP and hesigned for small messages, so what happens when that exceeds the typical size of a UDP message (about 1 kilobyte)?

There is a CoAP mechanism called "block-wise transfer" that enables simple transfer of large resources by requesting them one chunk at a time, and re-assembling them (with checks in place that detect when the whole resource changed). While not available in all handler types, the SimpleRendered family of implementation just takes care of this. Any prints beyond the end of the current chunk are mostly discarded, so writes in there can never fail – they just need more and more packets to view the whole item.

Try it out: Trigger the endstop several times. The client will automatically assemble the response and make no big fuss about it, but eventually, a single CoAP "Block2" option in aiocoap's verbose output will give you a hint that block-wise transfer has happened:

$ aiocoap-client coap://10.42.0.175/status --credentials client.diag -v INFO:coap.aiocoap-client:Sending request: INFO:coap.aiocoap-client:GET to coap://10.42.0.175 INFO:coap.aiocoap-client:- Uri-Path (11): 'status' INFO:coap.aiocoap-client:No payload INFO:coap.aiocoap-client:Received response: INFO:coap.aiocoap-client:2.05 Content from coap://10.42.0.175 INFO:coap.aiocoap-client:- Etag (4): b'\x87\x06\xa3\xa1I\xf8X\xb7' INFO:coap.aiocoap-client:- Block2 (23): BlockwiseTuple(block_number=1, more=False, size_exponent=6) INFO:coap.aiocoap-client:Payload: 43757272656e74206d6f746f72207374... (1403 bytes total) Current motor status: Stop The endstop has been triggered. The endstop has been triggered. ⟦...⟧

Summary

This concludes the tutorial. In summary, you can now:

- Build your own applications with Ariel OS, and install them on a device;

- build CoAP server applications, and use them from different computers; and

- understand who can access your devices, and make your own choices about it.

Further resources to explore from here:

- The Ariel OS book provides an overview over other features the operating system provides.

- The coap-handler-implementations crate introduces many more helpers for building CoAP systems on this tutorial. The coap-message-demos crate contains several examples of how to use them.

- coap.space links to many more CoAP tools.

- All CoAP components presented here also work on other CoAP libraries, such as coap-rs. The coapcore crate that is developed as part of Ariel OS does the heavy lifting on the security parts, and can be used with many outside scenarios.

- More and updated tutorials will be announced on the Ariel OS Mastodon account.

Have fun exploring them!

Appendices

Working without user-installed tools

None of the tools "needed" for this tutorial are completely irreplacible:

- cargo-generate: This is just used to generate usable files as a starting point. Instead of using it, you can create all files in their mentioned locations manually as they are listed throughout the tutorial, including in the next section.

- cbor-edn: There is an online service at https://cbor.me/ that can perform the same conversions as this tool. Alternatively, the cbor-diag Ruby gem provides equivalent functionality.

- aiocoap: Instead of installing, you can run the tool from a cache by replacing all calls to aiocoap with pipx run --spec "aiocoap[oscore,prettyprint]" aiocoap-client, or install aiocoap in a Python virtual environment. An alternative CoAP client is libcoap's coap-client, which at the time of writing does not support EDHOC security.

The tools required for Ariel OS itself (described in its book's chapter on installing the build tools) are not so easily replaced, but rustup is by now part of many Linux distributions. For laze and probe-rs-tools, there are currently no known alternatives to installing them from source.

Fine print: The files we skipped

If you go through the tutorial without cargo generate, or are curious about the other files, here is what we did not touch. Alternatively, you can get them from the ariel-os-hello git template.

rust-toolchain.toml: So far, Ariel needs a nightly toolchain; while "latest nightly" usually works, we pin it to a specific nightly to avoid surprises. The file also lists targets (CPU types) that rustup should download, and components that are needed for some optimized builds.

[toolchain] # this should match the toolchain as pinned by ariel-os channel = "nightly-2024-12-10" targets = [ "thumbv6m-none-eabi", "thumbv7m-none-eabi", "thumbv7em-none-eabi", "thumbv7em-none-eabihf", "thumbv8m.main-none-eabi", "riscv32imc-unknown-none-elf", "riscv32imac-unknown-none-elf", ] components = ["rust-src"]

.cargo/config.toml: Just like it depends on unstable Cargo features, Ariel also applies modifications to existing crates – we aim to upstream all those, but often use the changes before the PR is merged in the original project (both to keep development swift and to thoroughly test the changes we propose).

The Cargo configuration pulls in a bunch of these overrides from Ariel's ariel-os-cargo.toml file. That file also contains convenient updates to default optimization levels.

include = "../build/imports/ariel-os/ariel-os-cargo.toml" [unstable] # This is needed so the "include" statement above works. config-include = true

About this tutorial

Written 2025-03 by Christian Amsüss chrysn@fsfe.org, published under the same license terms as Ariel OS (MIT or Apache-2.0).

Different terms apply to the images used:

- Ariel logo: Apache-2.0 or MIT, 2020-2025 Freie Universität Berlin, Inria, Kaspar Schleiser, <https://github.com/ariel-os/ariel-os/>

- STM32 photo: same as the text

- Raspberry Pico W: CC-BY-2.0, 2022 SparkFun Electronics, <https://commons.wikimedia.org/wiki/File:RPI_PICO_W_1.jpg>

The author is a contributor to the Ariel OS project; the views expressed in this blog post are his, and do not reflect any consensus or opinions of the Ariel community.

| Tags: | blog-chrysn |

|---|

Thursday, 2020-09-24

Rust in 2021: Leveraging the Type System for Infallible Message Buffers

The Rust programming language features a versatile type system. It gives memory safety by distinguishing between raw pointers, pointers to valid data and pointers to data that may be written to. It helps with concurrency by marking types that may be moved between threads. And it helps keep API users on the right track with typestate programming.

With some features of current nightly builds, this concept can be extended to statically check that a function will succeed. Let's explore that road!

Current state: Fallible message serialization

For a close-to-real-world example, we will look into how CoAP messages are built on embedded devices. CoAP is a networking protocol designed for the smallest of devices, and enables REST style applications on even the tiniest of devices with less than 100KiB of flash memory. The devices can take both the server and the client role.

Writing a response message nowadays may[1] look like this:

fn build_response<W: WritableMessage>(&self, request_data: ..., response: W) -> Result<(), W::Error> {

let chunk = self.data.view(request_data.block);

match chunk {

Ok((payload, option)) => {

message.set_code(code::Content);

message.add_opaque_option(option::ETag, &self.etag)?;

message.add_block_option(option::Block2, option);

message.add_payload(payload)?;

}

Err(_) => {

message.set_code(code::BadRequest);

}

};

Ok(())

}

As these devices typically don't come with dynamic management of the little RAM they have, it is common to build responses right into the memory area from which they are sent; that memory area is provided in the WritableMessage trait.

A CoAP server for large systems with dynamic memory management may not even need to ever err out here -- it could just grow its message buffer and set its error type to the (unstable) never_type. In a constrained system with a fixed-size message buffer, that is not an option. Moreover, while for some cases this may be client's fault and call for an error response, many such cases indicate programming errors: All the size requirements of a chunk and the options can be known, so if they don't fit in the allocated buffer, that's a programming error.

Typed messages: No runtime errors

Programming errros should trigger at compile time whenever possible. So what could that look like here? Let's dream:

fn build_response<W: WritableMessage<520>>(&self, request_data: ..., response: W) -> impl WritableMessage<0> {

let chunk = self.data.view(request_data.block);

match chunk {

Ok((payload, option)) => {

message

.set_code(code::Content)

.add_opaque_option(option::ETag, &self.etag)

.add_block_option(option::Block2, option)

.add_payload(payload)

}

Err(_) => {

message

.set_code(code::BadRequest)

.into()

}

}

}

Checking myserver v0.1.0

error[E0277]: the trait bound `impl WritableMessage<520>: WritableMessage<522_usize>` is not satisfied

--> src/main.rs:9:10

|

1 | fn build_response(&self, request_data: ..., response: W) -> impl WritableMessage<0> {

...

9 | .add_payload(payload)

| ^^^ the trait `WritableMessage<522_usize>` is not implemented for `impl WritableMessage<520>`

|

help: consider further restricting this bound

|

1 | fn build_response<W: WritableMessage<522_usize>>(...) {

error: aborting due to previous error

If the function can indicate clearly in its signature what it needs as a response buffer, static error checking can happen on all sides:

Inside the function, operations on the message can be sure to have the required space around.

Error conditions only arise when inputs need to be converted from unbounded to bounded values. They are, however, not the typical programming errors of underestimating the needed space, but reflect actual application error conditions that are now more visible and do not get buried in boilerplate error handling.

In the particular example above, the program only asked 520 bytes of memory, but would need 522 bytes in the worst case. Even with some testing, this would not have been noticed, and then suddenly fail once a file larger than 8KiB was transferred.

Outside the function, the caller can be sure to provide adaequate space, or will be notified at compile time that build_response is not implemented for too small a buffer.

How to get there

A cornerstone of tracking memory requirements in types are const generics. They are what allows creating types and traits like WritableMessage<522> in the first place.

Aside: typenum and generic-array

The typenum and generic-array crates do provide similar functionality.

Using them gets complicated very quickly, though, and debugging with them even more so.

While they do a great job with the language features available so far, const generics can provide a much smoother development experience due to their integration into the language.

The first bunch of const generics, min_const_generics, is already on the road to stabilzation; withoutboats' article on the topic summarizes well what can and what can't be done with them.

Applications like this will need more of what as I can tell is not even complete behind the const_generics feature gate: computation based on const generics will be essential in matching the input and output types of operations on size-typed buffers.

With those, methods like add_option<const L: usize>(option: u16, data: &[u8; L]) can be implemented on messages, with additional limitations on L being small enough to fit in the current message type.

Why this matters

Managing errors in this way ensures that out-of-memory errors do not come as a surprise, and enhances visibility of throse error case that do need consideration.

Furthermore, each caught error contributes to program size. Without help from the type system, the compiler can only rarely be sure that the size checks are unnecessary. Not only do these checks contribute to the machine code, they often also come with additional error messages (even line numbers or file names) generated for the handler that eventually acts on them. (This is less of an issue when error printing is carefully avoided, or a tool like defmt is employed).

Last but not least, there's a second point to in-place creation of CoAP messages: Options must be added in ascending numeric order by construction of the message; adding a low-number option later is either an error or needs rearranging the options, depending on the implementation. The same const generics that can ensure that sufficient space is available can just as well be used to statically show that all options are added in the right sequence.

Context, summary and further features

This post has been inspired by Rust Core Team's blog post on creating a 2021 roadmap.

The features I have hopes to use more in 2021 are:

- never_type, to indicate when an implementation claims to never fail,

- min_const_generics, to support numbers as a part of types, and

- more const_generics, especially with arithmetic operations.

- On the receiving side of the CoAP implementations, the generic_associated_types and type_alias_impl_trait features can simplify message parsing a lot. I have a marvellous example of this, but this summary is too narrow to contain it.

| [1] | While the examples are influenced by the coap-message crate, they do not reflect its current state of error handling. Also, they assume a few convenience functions to be present for sake of brevity. |

| Tags: | blog-chrysn |

|---|

Friday, 2020-06-26

Resuming suspend-to-disk with missing initrd

This morning two unfortunate things coincided:

- My /boot had recently run out of disk space, I did my usual workaround of removing all initrd images, and forgot to do the second step of rebuilding them. (Or I just reconfigured grub instead of initramfs-tools).

- My battery went out and the machine went to hibernation.

Consequently, the machine failed to come up at all, showing a kernel panic about being unable to find the root device.

As I have way more volatile state in a running session than I'd like, I made every effort to resume the session, which (spoilers!) eventually worked. Note that this is not a tutorial, just a report that roughly these steps worked for me.

It's worth pointing out that it's likely one only gets one shot at this. Had I gotten it half-right to the point where the init system could open the encrypted root partition but got the resume partition wrong, it would come up and invalidate any hibernation image there was. (An issue I had run into several years ago where the system would try to resume from there on a subsequent boot and wreck everything has been resolved already as far as I understood).

Common setup

The computer I recovered is running Debian GNU/Linux sid, the helper device is on stable (buster).

Both have their internal SSD (nvme0n1) partitioned into an UEFI partition, a (too small!) boot partition, and a LUKS encrypted LLVM PV that holds at least a root and a swap partition.

Initial error

Kernel panic - not syncing: VFS: Unable to mount root fs on unknown-block(0,0)

(Note that these don't come from screenshots, but from a reconstruction I did afterward, so some details might be off.)

This had me startled for a moment fearing for my disk, but the lack of a Loading initial ramdisk ... in the grub phase pointed me to the first-stage culprit: The grub entry did not have an initrd configured. (And none of the backup kernels had one either).

initrd transfusion

Fortunately, I have a second device nearby with a very similar setup. I copied its initrd to a USB stick and tried to boot from there.

echo 'Loading Linux 5.6.1-1-amd64' linux /vmlinuz-5.6.0-1-amd64 root=-/dev/mapper/hephaistos--vg-root ro quiet echo 'Manually added initrd' initrd (hd0)/initrd.img-5.6.0-0.bpo.2-amd64

No luck though -- and the initrd shell barely showed anything in /dev at all. lsmod revealed no loaded modules. Turned out that the systems were not identical enough, and the kernel versions didn't match. A second run with matching kernel versions fixed this:

echo 'Loading Linux 5.6.1-1-amd64' linux (hd0)/vmlinuz-5.6.0-0.bpo.2-amd64 root=-/dev/mapper/hephaistos--vg-root ro quiet echo 'Manually added initrd' initrd (hd0)/initrd.img-5.6.0-0.bpo.2-amd64

UUIDs

A plain boot attempt from there did not work (it waited for the other computer's disk to show up, which it knew by its UUID to start decrypting it),

At this stage, I could extract the two UUIDs I knew I would later need:

# blkid /dev/nvme0n1p3 /dev/nvme0n1p3: UUID="b47fac73-66d5-42c6-a370-f9f1dce497d1" TYPE="crypto_LUKS" PARTUUID="76773950-442c-4b81-bf70-9b9da41ec5bf" # cryltsetup luksOpen /dev/nvme0n1p3 decrypted [...] # blkid /dev/mapper/hephaistos--vg-swap_1 /dev/mapper/hephaistos--vg-swap_1: UUID="3c7f3271-f54f-41f0-8ec5-aee20977418e" TYPE="swap"

With this, I could take the USB stick back to the helper PC.

initrd fine tuning

As both the encrypted and the resume partition are named in the initrd, I tried building a suitable initrd.

On the helper PC, I changed /etc/crypttab to reflect the UUID of the NVM partition, and /etc/initramfs-tools/conf.d/resume to match the name that the encrypted swap partition would have after doing cryptsetup from the crypttab. (The swap partition's UUID did not go in there yet).

I used dpkg-reconfigure initramfs-tools to rebuild the helper's initramfs images. That may not have been a particularly wise move, but trusting that that device wouldn't need a reboot soon I took the risk temporarily. (Those images are a bit pesky to edit by hand; were that easier, I could have edited them on the USB stick and be done with it).

Fortunately, the updateinitramfs process run in that update pointed out an issue to me: It couldn't find the indicated resume device (how would it, it's on another machine), and fell back to a sane default.

That meant that resume would not work -- the gravest danger of this operation, as it would mean I'd lose the resume state.

Final startup

Fortunately, the resume partition can also be specified on the kernel command line, which is where the swap partition's UUID came in handy:

echo 'Loading Linux 5.6.1-1-amd64' linux (hd0)/vmlinuz-5.6.0-0.bpo.2-amd64 root=-/dev/mapper/hephaistos--vg-root ro quiet resume=UUID=3c7f3271-f54f-41f0-8ec5-aee20977418e echo 'Manually added initrd' initrd (hd0)/initrd.img-5.6.0-0.bpo.2-amd64

With that, my system came up as it should be.

Of course, first thing I did there was dpkg-reconfigure initramfs-tools to ensure it would come up again.

Second thing, I restored the helper PC, or I'd have needed to do the same thing the other way 'round once more later.

| Tags: | blog-chrysn |

|---|

Monday, 2018-05-07

Using TOR like a VPN to make SSH available

Back in the day, the machines I used to maintain either had public IP addresses or were local to my home network; that has changed. I still want to regularly log in to devices "on the road" by means of SSH.

The approaches I considered were:

"Use the (modern) internet": Only consider IPv6 connections where there is no NAT any more, have the devices announce themselves via some kind of dyndns (TSIG'd AXFRs seem to be the way to go)), and tell their firewalls to let SSH traffic pass (UPNP might be the way to go there).

I did not follow thisapproach because there are still backwards internet providers that don't provide IPv6, and because especially mobile providers have a tendency not to implement port opening protocols.

"Use a VPN": Set up a VPN server, assign addresses based on host certificates.

Would have worked, but I dreaded the configuration effort and running an own central server.

"Use TOR hidden services": Run TOR on all boxes and announce a hidden service that runs SSH.

A helper script and custom DNS data allow using this without any per-server configuration on the clients.

That's the approach I'm describing here.

Warning

Even though this may sound secure due to the use of TOR hidden services, it does not attempt to be. I would have been perfectly OK with just opening the SSH port to the network (relying on SSH to be secure and not needing any more authentication from the client) and announcing that address, and that's the only security level I expect. Do not follow the steps shown here without understanding what they mean and the implications on your device's security.

Server setup

On Debian, making SSH available via TOR is really straightforward:

- Install openssh-server and tor.

Configure SSH (/etc/ssh/sshd_config) in a way you want to have public-facing. All I changed from the default config was setting PasswordAuthentication no to avoid pwnage from insecure guest / testing accounts.

Start a TOR hidden service. In /etc/tor/torrc, there are already commented out examples for that. In line with them, I added:

HiddenServiceDir /var/lib/tor/hidden_service/ HiddenServicePort 22 127.0.0.1:22

After restarting SSH and TOR, the .onion name for that host can be read from /var/lib/tor/hidden_service/hostnme; I'll be using abc1234567890.onion as an example name here.

Note

It's not a fully hidden service

The service run here should not be mistaken for having any privacy properties in the sense of hiding the server. The service uses the same host key on TOR and in the LAN, so everyone who knows your host key will know who runs this servicE. toR is used only for discovery here.

TOR can probably be configured for the hidden service to only use one hop, this could increase performance and decrease the load on the TOR network; I did not get around to trying this yet.

Client setup I

For a first test, I set up my SSH client manually in ~/.ssh/config:

Host myhost.example.com

ProxyCommand nc -X 5 -x localhost:9050 abc1234567890.onion 22

After that, ssh myhost.example.com always went through TOR.

For some setups, that may be sufficient; I chose to take it a little further.

DNS setup

As I prefer not to have explicit configuration about every server on every client machine (as I would need to with the above), I decided to publish the .onion names of the hosts; the most logical choice seems to be in DNS.

To avoid confusing other services, I went for a mechanism that looks a bit like DNS-SD (but is not, as .onion addresses are not "real" host names, and SSH does not use DNS-SD anyway). In my zone file for example.com, it looks like this:

_ssh._onion.myhost.example.com IN TXT abc1234567890.onion

Those I publish with knot DNS, but any DNS server should be able to take those as static records.

Client setup II

I now only keep a single stanza in my SSH config entry:

Host *

ProxyCommand ~/.config/maybe-tunnel %h %p

This makes all connections go through an (executable) helper script of the following content:

#!/bin/sh

set -e

if getent hosts $1 >/dev/null

then

exec nc $1 $2

else

torhost="`dig +short TXT _ssh._onion."$1" | grep \\.onion | sed 's/"//g'`"

if [ x = x"$torhost" ]

then

echo "Host $1 could not be resolved, and has no _ssh._onion TXT record either." >&2

exit 1

fi

exec nc -X 5 -x localhost:9050 "$torhost" $2

fi

This script looks up the configured host name. If it resolves, it uses nc to open a direct connection, emulating what SSH does by default. (If SSH accepted dynamic configuration, I would just not set a ProxyCommand at all in this case). This covers both the case of "regular" servers that have public IPs, and also makes local connections work, because as long as I am inside the example.com LAN and the other machine is too, the internal dnsmasq server will answer the request with the local address.

If the address does not resolve, the script looks for an _ssh._onion record that would tell the address of a hidden service announced as per the above. If one is found, a connection is established using the local TOR instance (running on port 9050 by default).

Note

SSH host keys

As I use this setup right now, I either establish the hosts' identity once-per-client when I first use them in the LAN (so their public host keys get stored in my user's known_hosts file under their .example.com name), and/or I store their public keys in the known_hosts file I deploy to my boxes.

If one uses DNSSEC, it should be feasible to announce SSHFP records for myhost.example.com (even though there's no A/AAAA record for that host) and trust those.

Outlook

This does not interact well when both client and server are in the same public WiFi and could discover each other locally (they'd still go via TOR).

It would be interesting to extend this to proper DNS-SD discovery with multicast -- essentially, once a host has a known key (from TOFU or SSHFP records), whatever has no A/AAAA record would be up for local discovery, falling back to TOR based discovery.

TOR was what worked easy and fast, but its onion routing properties are not really being used here. Other schemes that "just" provide a TCP turnover point might be more convenient here. Obviously, if anything were to be usable without per-client customization, that' be great, but as SSH does not really support virtual hosting, that might be tricky.

Final words

This is an experimental setup I have just established, and am rolling out to my mobile devices; I have no practical experience with it yet.

I'd appreciate any comments on it to (preferably by mailto:chrysn@fsfe.org). I will post updates here if something significant changes.

| Tags: | blog-chrysn |

|---|

Tuesday, 2016-06-28

Obervations from playing around with a Beaglebone Black

I've toyed around with a Beaglebone Black for some days, evaluating its usefulness as a living room box. (Long story short: It's unusable for that, because it can drive only up to 720p due to pixel clock limits, lack of hardware video decoding support and lack of practical [1] 3D acceleration).

(Long-term note: This describes the state of things around 2016-06-28. In some months, this is hopefully only historically relevant, and might be shortened.)

Nevertheless, I've learned some things:

Serial Debugging is easy to connect once you have a 3.3V USB UART -- the USB UART's GND goes to J1, RX goes to J5 and TX goes to J4, where J1..6 is the pin header next to the socket bar between USB host and 5V, and only J1 is labelled.

Except for debugging, it is not really required, though; for most of what is described on the rest of this page, one can do without.

The beaglebone by default executes the U-Boot loader stored on the internal flash memory (eMMC); pressing the boot select button S2 during powerup (reset doesn't count) alters the boot sequence to skip that. This first stage of the booting process is goverened by a program in ROM that I haven't seen anyone try to manipulate yet. (Also, why should one -- it behaves sanely and not touching it makes the device unbrickable for all I can tell).

- When trying to boot from an MMC device (either the builtin eMMC called mmc0 or the microSD card called mmc1; eMMC and SD cards are rather exchangable as far as the CPU is concerned), the startup code (SPL/MLO (see later), U-Boot, U-Boot config) may either reside on the first and FAT formated partition, or at some fixed offsets between the partition table and the first partition. (So if you wind up with arguments in the kernel cmdline you fail to find in the mounted image, have a look at the intermediate space with a hex editor).

- Failing that (eg. because no microSD card is inserted and S2 was pressed), the ROM fires up an Ethernet interface via the USB gadget side (ie. when the mini-USB port is connected to a PC, a new USB network card appears there) and asks for the SPL/MLO via bootp.

The Alexander Holler's boot explaination image visualises this well.

Both the MMC and the USB networking boot flow take an intermediate step (the secondary program loader, SPL; file name MLO) before loading full U-Boot (file name u-boot.img or similar). The SPL is actually a U-Boot itself, just stripped down to run from on-board RAM; it then initializes the rest of the RAM and loads the fully configured U-Boot (with debug prompt and device support for everything else) to continue.

It is possible to do the entire flashing without possession of a microSD card, but it's not a widespread way of operation and thus you might encounter some bugs.

The following workflow shows how to flash the provided Debian image right into eMMC.

Obtain a copy of the compressed image file from the latest-images page.

Get a copy of the spl, uboot and fit files from the BBBlfs bin/ directory. (There's a copy inside the image at partition 1 in /opt/source/BBBlfs, that should work as well and can be extracted using kpartx and loop mounting). Rename fit to itb because that's the file name needed later.

Prepare a DHCP server for the incoming USB connections. I generally find it practical to have a device independent Ethernet setting which I call "I am a WiFi adapter" in NetworkManager, which is configured to have IPv4 "Shared to other computers" -- setting that to "automatically connect to this network when it is available" for the time of playing around is one of the easiest ways to get a DHCP server running.

Tell the server to ship the required images by creating a /etc/NetworkManager/dnsmasq-shared.d/spl-uboot-fit.conf file, which modern NetworkManager versions include to every started DHCP server, or include the sniplet into your dnsmasq config if you start it manually:

bootp-dynamic enable-tftp tftp-root=/where/you/stored/last/steps/files dhcp-vendorclass=set:want-spl,AM335x ROM dhcp-vendorclass=set:want-uboot,AM335x U-Boot SPL dhcp-boot=tag:want-spl,/spl dhcp-boot=tag:want-uboot,/uboot

This will serve the right file at the right time.

Wire up the BeagleBone's mini-USB to your workstation and keep S2 pressed while plugging in. You should see NetworkManager take the interface up and set it to "I am a WiFi adapter" (or manually do that if you didn't configure autoconnect).

If the connection fails to come up / disappears, check your NetworkManager log files (eg. at /var/log/daemon.log); chances are that dnsmasq does not come up because it can't access the TFTP root path. If it does come up, it should disappear again soon afterwards, and you should see a line like

dnsmasq-tftp[...]: sent /some/path/bin/spl to 10.42.0.x

which indikates that the first stage loader asked as expected and got its response. Following that, a new USB network interface should appear, and you should see the same line just with uboot instead of spl. (Without autoconnect, you'd have to nudge NetworkManager again).

Due to what I consider a bug in BBBlfs, we need to take a detour (see bug details on the why, this is just the how of the workaround):

sudo /etc/init.d/network-manager stop sudo ip address add dev enp0s20u1c2 192.168.1.9/24 sudo dnsmasq --no-daemon --port=0 --conf-dir=/etc/NetworkManager/dnsmasq-shared.d

Once that too shows a "sent" line, end dnsmasq with Ctrl-C; you can start NetworkManager again. (There's a timeout of guesstimated one minute in this step, after which the cycle starts from the beginning. If you don't get a "sent" line from the dnsmasq, check whether you properly renamed the fit file.)

You should now see a new block device (/dev/sdc for me) appear, which represents the eMMC flash memory on the BeagleBone. If you have a serial console attached, you are dropped in a linux root shell on the BeagleBone. (What is running there is actually "just" a linux kernel with a g_ether module configured to expose /dev/mmcblk0).

With that, you can flash images onto eMMC (follow the steps further) or mount and modify existing installations (eg. change the root password you were sure not to forget and have no clue about a year later).

You can now flash the image using sudo dd if=bone-debian-8.4-lxqt-4gb-armhf-2016-05-13-4gb.img of=/dev/sdX -- and double-check whether you're writing to the correct sd-whatever device, dd is short for "disk destroyer".

The current images have a (uboot config?) bug that results in the wrong root= argument being passed to the kernel. This can be circumvented by telling U-Boot to use disk UUIDs instead of device names [2]:

Mount /dev/sdc1 (current bone Debian images have exactly one partition) and edit its /boot/uEnv.txt. There should be a commented-out line uuid=; remove the comment mark (#) and append the UUID of the partition obtained by the blkid /dev/sdc1 command.

Unmount the disk, wait until the LEDs on the bone only flicker regularly, and then power-cycle it. The device should now boot into a full Debian that can be used from an HDMI attached monitor and USB mouse / keyboard.

| [1] | There is only a non-free driver for the SGX/PowerVR builtin 3D accelerator, but that means I'd need to wait for vendor provided drivers for each kernel update the device would see during its regular lifetime and then still not retain full kernel support. |

| [2] | The images' bug tracker requires administrator approval for login, until then here's what it'll say:

|

| Tags: | blog-chrysn |

|---|

Wednesday, 2015-03-18

Switching terminal emulator: urxvt to xfce4-terminal

I have just switched my terminal from urxvt to xfce4-terminal.

Some considerations that led to the decision:

- urxvt's annoying Ctrl-Shift behavior: Just because I want Unicode displayed, I don't necessarily want to use all the input methods, which get in one's way and are hard to disable. 393847 is a workaround, but not much help for 256 color users. (That was what pushed me over the switching inertia.) If I want to input 🂭, I use gucharmap.

- Switching font sizes is easy with Xfce terminal.

- Standard desktop software: As much as I like my custom desktop, I try to stick to non-exotic components where practical; Xfce is available on most desktops around me.

- I don't need terminal features like scrollback or tabs, because I use tmux both locally and remote for scrollback and multiple shells in a single window.

- Both feel equally snappy, provide support running a single process for many windows, support 256 colors, can be configured not to show anything but the text, and behave well with respect to re-wrapping text (at least when used with tmux).

Monday, 2013-03-04

ARandR 0.1.7.1 released

…with the following changes since 0.1.4 (the intermediate releases were not important enough to get own release announcements):

- Merged parts of the cglita branch

- solves ValueError / "1080p" issue (Debian bug #507521)

- Fix the 'primary' issue

- ignores the primary keyword

- makes ARandR compatible with xrandr 1.4.0

- Show the entire output submenu as disabled instead of the "Active" checkbox

- New unxrandr tool

- New translations:

- Bosnian (by Semsudin Abdic)

- Breton (by Belvar)

- Dutch (by wimfeijen)

- Galician (by Miguel Anxo Bouzada)

- Greek (by Dimitris Giouroukis)

- Hungarian (by Tamás Nagy)

- Japanese (by o-157)

- Korean (by ParkJS)

- Lithunian (by Algimantas Margevičius)

- Persian (by Alireza Savand)

- Romanian (by sjb and Себастьян Gli ţa Κατινα)

- Slovak (by Slavko)

- Swedish (by Ingemar Karlsson)

- Ukrainian (by Rax Garfield)

- Updated translations:

- French (by Bruno Patri)

- Lithuanian (by Mantas Kriaučiūnas)

- Persian (by Alireza Savand)

- Spanish (by Miguel Anxo Bouzada)

- Other small bugfixes

Saturday, 2012-08-18

Window manager changed to i3: scratchpad tricks

Sticking to what seems to be my two-year window manager changing schedule, I switched again and ended up with i3. Xmonad was ok, performance- and feature-wise, but I didn't find the time to learn Haskell to be really able to configure it properly.

i3 has some properties I particularly like:

- The way it handles multi-monitor setups: Every workspace gets assigned to an output; switching to a workspace on another output opens the workspace there and warps the focus to that output.

- Layouting is so much more flexible, though I still somewhat miss my Super+Return "swap the current window with the big one" of Xmonad.

- The scratchpad: a place to send a window where it can easily be recalled as a popup to the desktop. To be fair, it should be possible to implement the same thing with Xmonad or awesome, but here, it's usable without learning a whole language.

I use the scratchpad as a more powerful replacement for dmenu_run, which under high IO load can take long time to fire up: I keep a terminal with tmux ready at the scratchpad, which is configured to hide itself again as soon as a command is executed, and to spawn another tmux window to be ready for the next input.

Usability-wise, it's like running dmenu or gmrun (which I used some time ago): You press Super-p, a window shows up, you enter your command, press Return, the window vanishes and the command gets executed. In addition, it offers

- a real shell, with all its tab completion powers, aliases etc., and

- a way to recover started processes' stdout/err data, even if they crash (the windows are kept around for some minutes).

The code for that is pretty short, and most of it is to cover corner cases. This file is ~/.config/i3/scratchpad/perpetual_terminal.sh and is best executed from i3 under a flock guard:

(download)

#!/bin/sh

run_inside="'ZDOTDIR=${HOME}/.config/i3/scratchpad zsh'"

while true; do

# not using urxvtc here, as we're relying on the process to run

# until either

#

# * it gets detached (eg by ^Ad)

# * it terminates (because someone killed all windows)

#

# in any case, we try to reattach to the session, or, if that fails,

# create a new one.

urxvt -name scratchpad -e sh -c "tmux attach -t scratchpad || tmux new-session -s scratchpad $run_inside ';' set-option -t scratchpad default-command $run_inside"

done

And this file, ~/.config/i3/scratchpad/.zshrc, configures the shell that gets executed:

(download)

source ~/.zshrc

# reset again what was set outside

export ZDOTDIR=${HOME}

PS1="(scratchpad)$PS1"

preexec() {

i3-msg '[instance="^scratchpad$"]' move scratchpad

tmux new-window

precmd() {

echo "=============================="

echo "exited with $?"

exec sleep 10m

}

}

zshexit() {

# user pressed ^D or window got killed, will not be executed when the

# shell gets "killed" by the final exec in the regular workflow

i3-msg '[instance="^scratchpad$"]' move scratchpad

tmux new-window

}

TRAPHUP() {

# when the window gets killed, just die

zshexit() {}

}

To activate this from i3, the following lines are placed in the i3 config:

bindsym $mod+p [instance="^scratchpad$"] scratchpad show exec_always flock -w1 ~/.config/i3/scratchpad/lockfile ~/.config/i3/scratchpad/perpetual_terminal.sh for_window [instance="^scratchpad$"] move scratchpad

All of the above requires zsh, tmux and urxvt to be installed, zsh to be configure as login shell and possibly other things I might have forgotten (please let me know if you have trouble reproducing this setup).

Other i3 features that look good and I plan to discover:

- Easy to use modes -- maybe I'll revive my key combos of old (Super+Space, g, P for "games / pioneers server", Super+Space, Print, u for "take a screenshot, upload it to my server and put the URL into my clipboard" etc.

- VIM-like marks

An issue I yet have to find a solution for is the status bar: I want nice graphs of CPU usage etc, so the tiling window manager typical solutions like dzen2 or i3's i3status/i3bar won't do the trick. I'm using conky for the moment, but I still need i3bar because it has a realtime display of the active workspace and of urgency notifications. Ideally, I'd want i3bar to provide an X window to conky where it can draw, but for the moment, I'm keeping a single statusline worth of visual information in two distinct panels.

Saturday, 2010-12-11

ARandR 0.1.4 released

This is a bugfix / translation update release with the following changes since 0.1.3:

- Fix for "unknown connection" bug

- New translations:

- Russian (by HsH)

- French (by Clément Démoulins)

- Polish (by RooTer)

- Arabic (by Mohammad Alhargan)

- Turkish (by Mehmet Gülmen)

- Spanish (by Ricardo A. Hermosilla Carrillo)

- Catalan (by el_libre)

- Chinese (by Carezero)

- Translation updates:

- Danish

- Brazilian

- Arabic (by aboodilankaboot)

I am sorry to disappoint those that wait for the "next big" release (with enhanced parsing to fix #507521 and support for xrandr 1.3 features like --primary) – while those issues are as important as the bug fixed here, this was a "low hanging fruit" and a welcome occasion to do a release with all the new translations. Big thanks to all translators!

Tuesday, 2010-09-07

Window manager changed to xmonad

After having used awesome for more than two years, I just switched to xmonad. Main reasons for the change were the configuration mess (which rendered my carefully crafted CPU and memory indicators useless in one of the last major upgrades) and the way awesome handles multi-monitor setups (boils down to reloading after adding or removing an output).

The packages I use are:

xmonad

suckless-tools # provides dmenu-run configured on Win-P

gmrun # confiured on Win-Shift-P

dzen2 # menu bar

# needed for custom configuration:

libghc6-xmonad-dev

libghc6-xmonad-contrib-dev

My xmonad configuration looks like this:

(download)

import XMonad

import XMonad.Hooks.DynamicLog

import XMonad.Hooks.ManageDocks

import XMonad.Util.Run(spawnPipe)

import XMonad.Util.EZConfig(additionalKeys)

import System.IO

import qualified Data.Map as M

import XMonad.Actions.CycleWS

import XMonad.Actions.CycleRecentWS

import XMonad.Hooks.DynamicLog (PP(..), dynamicLogWithPP, wrap, defaultPP)

import XMonad.Hooks.UrgencyHook

-- keybindings: similar to the awesome default config i got used to

-- ---------------------------------------------------------------------------

myKeys conf@(XConfig {XMonad.modMask = modm}) = [

((modm, xK_Down), nextScreen),

((modm .|. shiftMask, xK_Down), shiftNextScreen),

((modm, xK_Up), prevScreen),

((modm .|. shiftMask, xK_Up), shiftPrevScreen),

((modm, xK_Left), prevWS),

((modm .|. shiftMask, xK_Left), shiftToPrev),

((modm, xK_Right), nextWS),

((modm .|. shiftMask, xK_Right), shiftToNext),

((modm, xK_Escape), toggleWS),

((modm .|. shiftMask, xK_b), spawn "epiphany -p")

]

newKeys x = M.union (keys defaultConfig x) (M.fromList (myKeys x))

-- main program

-- ---------------------------------------------------------------------------

main = do

statusBarPipe <- spawnPipe statusBarCmd

xmonad $ withUrgencyHook dzenUrgencyHook { args = ["-bg", "darkgreen", "-xs", "1"] }

$ defaultConfig {

modMask = mod4Mask, -- windows key

terminal = "uxterm",

manageHook = manageDocks <+> manageHook defaultConfig, -- autodetection for gnome panel and similar

layoutHook = avoidStruts $ layoutHook defaultConfig, -- adds gaps for panels

keys = newKeys,

logHook = dynamicLogWithPP $ myPP statusBarPipe

}

-- status bar on top

-- ---------------------------------------------------------------------------